Vibe coding MenuGen

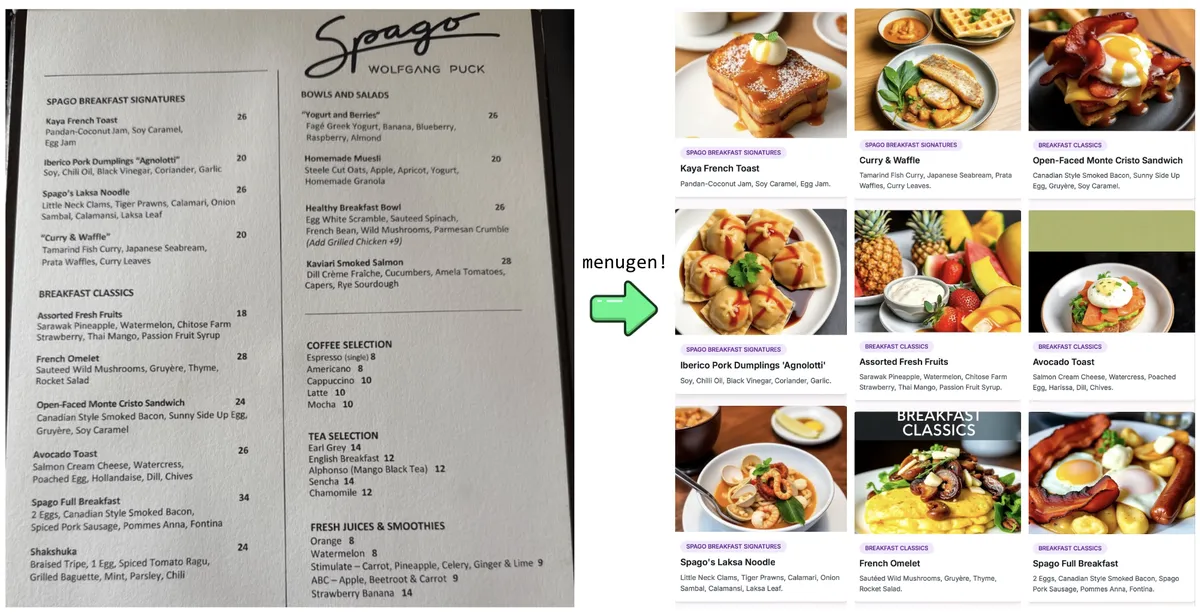

Very often, I sit down at a restaurant, look through their menu, and feel... kind of stuck. What is Pâté again? What is a Tagine? Cavatappi... that's a pasta right? Sweetbread sounds delicious (I have a huge sweet tooth). It can get really out of hand sometimes. "Confit tubers folded with matured curd and finished with a beurre noisette infusion." okay so... what is this exactly? I've spent so much of my life googling pictures of foods that when the time came to attend a recent vibe coding hackathon, I knew it was the perfect opportunity to finally build the app I always wanted, but could not find anywhere. And here it is in the flesh, I call it... 🥁🥁🥁 ... MenuGen:

MenuGen is super simple. You take a picture of a menu and it generates images for all the menu items. It visualizes the menu. Obviously it's not exactly what you will be served in that specific restaurant, but it gives you the basic idea: Some of these dishes are salads, this is a fish, this is a soup, etc. I found it so helpful in my personal use that after the hackathon (where I got the first version to work on localhost) I continued vibe coding a bit to deploy it, add authentication, payments, and generally make it real. So here it is, give it a shot the next time you go out :): menugen.app!

MenuGen is my first end-to-end vibe coded app, where I (someone who tinkers but has little to no actual web development experience) went from scratch all the way to a real product that people can sign up for, pay for, get utility out of, and where I pocket some good and honest 10% markup. It's pretty cool. But in addition to the utility of the app, MenuGen was interesting to me as an exploration of vibe coding apps and how feasible it is today. As such, I did not write any code directly; 100% of the code was written by Cursor+Claude and I basically don't really know how MenuGen works in the conventional sense that I am used to. So now that the project is "done" (as in the first version seems to work), I wanted to write up this quick post on my experience - what it looks like today for a non-webdev to vibe code a web app.

First, local version. In what is a relatively common experience in vibe coding, the very first prototype of the app running on my local machine took very little time. I took Cursor + Claude 3.7, I gave it the description of the app, and it wrote all the React frontend components very quickly, laying out a beautiful web page with smooth, multicolored fonts, little CSS animations, responsive design and all that, except for the actual backend functionality. Seeing a new website materialize so quickly is a strong hook. I felt like I was 80% done but (foreshadowing...) it was a bit closer to 20%.

OpenAI API. Around here is where some of the troubles started. I needed to call OpenAI APIs to OCR the menu items from the image. I had to get the OpenAI API keys. I had to navigate slightly convoluted menus asking me about "projects" and detailed permissions. Claude kept hallucinating deprecated APIs, model names, and input/output conventions that have all changed recently, which was confusing, but it resolved them after I copy pasted the docs back and forth for a while. Once the individual API calls were working, I immediately ran into some heavy rate limiting of the API calls, allowing me to only issue a few queries every 10 minutes.
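
One common way to live with rate limits like these is to retry with exponential backoff. Here is a minimal Python sketch, assuming a hypothetical `RateLimitError` that stands in for whatever your API client raises on an HTTP 429 (MenuGen itself is TypeScript, and this is not its actual code):

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for the error an API client raises on HTTP 429."""


def call_with_backoff(fn, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call fn(), retrying on RateLimitError with exponential backoff plus jitter."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts, surface the error to the caller
            # Wait 1x, 2x, 4x, ... the base delay, plus up to 10% random jitter.
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, 0.1 * delay))
```

This doesn't raise the limit, of course. It only keeps a burst of requests from failing outright, and with a quota of a few calls per 10 minutes you would still be waiting a lot.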

Replicate API. Next, I needed to generate images given the descriptions. I signed up for a new Replicate API key and ran into similar issues relatively quickly. My queries didn't work because the LLM's knowledge was out of date, but in addition, this time even the official docs were a little bit out of date due to recent changes in the API, which now doesn't return the JSON directly but instead some kind of a Streaming object that neither I nor Claude understood. I then faced rate limiting on the API so it was difficult to debug the app. I was told later that these are common protection measures by these services to mitigate fraud, but they also make it harder to get started with new, legitimate accounts. I'm told Replicate is moving to a different approach where you pre-purchase credits, which might help going forward.

Vercel deploy. At this point at least, the app was working locally so I was quite happy. It was time to deploy the basic first version. Sign up for Vercel, add project, configure it, point it at my GitHub repo, push to master, watch a new Deployment build and... ERROR. The logs showed some linting errors due to unused variables and other basic things like that, but it was hard to understand or debug because everything worked fine on local and only broke on Vercel build, so I debugged the issues by pushing fake debugging commits to master to force redeploys. Once I fixed these issues, the site still refused to work. I asked Claude. I asked ChatGPT. I consulted docs. I googled around. 1 hour later I finally realized my silly mistake - My .env.local file stored the API keys to OpenAI and Replicate, but this file is (correctly!) part of .gitignore and doesn't get pushed to git, so you have to manually navigate to Vercel project settings, find the right place, and add your environment keys manually. I kind of understood the issue relatively quickly, but I could see an aspiring vibe coder get stuck on this for a while. Once the deployment finally succeeded, Vercel happily offered a URL. This surprised me again because my project was a private git repo that was not ready to see the light of day. I didn't realize that Vercel will take your !private! repo of an unfinished project and auto-deploy it on a totally public and easy to guess url just like that, hah.
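
A cheap guard against the missing-keys problem is to check for required environment variables at startup and fail with a readable message. A minimal Python sketch of the idea (the variable names are assumptions for illustration, and the real app is TypeScript):

```python
import os

# Assumed variable names for illustration; use whatever your app actually reads.
REQUIRED_KEYS = ("OPENAI_API_KEY", "REPLICATE_API_TOKEN")


def missing_env_keys(env=os.environ, required=REQUIRED_KEYS):
    """Return the required keys that are unset or empty in the given environment."""
    return [key for key in required if not env.get(key)]


def check_env(env=os.environ, required=REQUIRED_KEYS):
    """Raise at startup instead of failing somewhere deep inside a request."""
    missing = missing_env_keys(env, required)
    if missing:
        raise RuntimeError(
            "Missing environment variables: " + ", ".join(missing)
            + ". Locally these come from .env.local; on the host they"
            + " have to be added in the project settings."
        )
```

With a check like this, a deploy without keys breaks loudly in the logs on the first request rather than quietly "refusing to work".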

Clerk authentication. Claude suggested that we use Clerk for authentication, so I went along with it. Signed up for Clerk, configured the project, got my API keys. At this point Claude hallucinated about 1000 lines of code that appeared to be deprecated Clerk APIs. I had to copy paste a lot of the docs back and forth to get things gradually unstuck. So far, Clerk had been running in a "Development" deployment. To move to a "Production" deployment, there were more hoops to jump through. Clerk demands that you host your app on a custom domain that you own. menugen.vercel.app will not work. So I had to purchase the domain name menugen.app. Then I had to wire the domain to my Vercel project. Then I had to change the DNS records. Then I had to pick an OAuth provider; I went with Google. But to do that was its own configuration adventure. I had to enable an "SSO connection". I had to go over to Google Cloud Console and create a new project, and add a new OAuth Credential. I had to wait some time for an approval process around here. I then had to go back and forth between the nested settings of all of Vercel, Clerk and Google for a while to wire it up properly. I thought of quitting the project around here, but I felt better when I woke up the next morning.

Stripe payments. Next I wanted to add payments so that people can purchase credits. This means another website, another account, more docs, more keys. I select "Next.js" as the backend, copy paste the very first snippet of code from the "getting started" docs into my app and... ERROR. I realized later that Stripe gives you JavaScript code when you select Next.js, but my app is built in TypeScript, so every time I pasted a snippet of code it made Cursor unhappy with linter errors, but Claude patched things up ok over time after I told it to "fix errors" a few times and after I threatened to switch to ChatGPT. Then back in the Stripe dashboard we create a Product, we create a Price, we find the price key (not the product key!), copy paste all the keys around. Around here, I caught Claude using a really bad approach to match up a successful Stripe payment to user credits (it tried to match up the email addresses, but the email the user might give in the Stripe checkout may not be the email of the Google account they signed up with, so the user might not actually get the credits that they purchased). I point this out to Claude and it immediately apologizes and rewrites it correctly by passing around unique user ids in the request metadata. It thanks me for pointing out the issue and tells me that it will do it correctly in the future, which I know is just gaslighting. But since our quick test works, only a few more clicks to upgrade the deployment from Development to Production, now re-do a new Product, redo a new Price, re-copy paste all the keys and ids, locally and in the Vercel settings... and then it worked :)
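
To make the email-vs-user-id point concrete, here is a minimal Python sketch of the corrected matching logic. It operates on a plain dict loosely shaped like a Stripe `checkout.session.completed` event; the `user_id` metadata key, the `balances` dict, and the credit amount are all hypothetical, and this is not MenuGen's actual (TypeScript) code:

```python
def credit_purchase(event, balances, credits_per_purchase=10):
    """Add credits to the user named in the session metadata; return that user id."""
    if event.get("type") != "checkout.session.completed":
        return None  # ignore unrelated events
    session = event["data"]["object"]
    # Key on our own user id, attached when the checkout session was created.
    # The checkout email is deliberately ignored: it may differ from the
    # email of the account the user signed up with.
    user_id = session.get("metadata", {}).get("user_id")
    if user_id is None:
        raise ValueError("checkout session has no user_id in metadata")
    balances[user_id] = balances.get(user_id, 0) + credits_per_purchase
    return user_id
```

A real webhook handler would also verify the event signature and guard against the same event being delivered twice. Both are left out here to keep the sketch short.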

Database? Work queues? So far, all of the processing is done "in the moment" - it's just requests and results right there and then, nothing is cached, saved, etc. So the results are ephemeral and if the response takes too long (e.g. because the menu is too long and has too many items, or because the APIs show too much latency), the request can time out and break. If you refresh the page, everything is gone too. The correct way to do this is to have a database where we register and keep track of work, and the client just displays the latest state as it's ready. I realized I'd have to connect a database from the Marketplace, something like Supabase PostgreSQL (even though Claude pitched me on using Vercel KV, which I know is actually deprecated). And then we'd also need some queue service like Upstash or so to run the actual processing. It would mean more services. More logins. More API keys. More configurations. More docs. More suffering. It was too much to bear. Left as future work.
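
For what it's worth, the "register work, display latest state" idea is small at its core. A minimal Python sketch with an in-memory dict standing in for the database (the `JobStore` class and its fields are hypothetical, not something MenuGen has):

```python
import uuid


class JobStore:
    """In-memory stand-in for a database table of menu jobs."""

    def __init__(self):
        self.jobs = {}

    def create(self, items):
        """Register a job with one empty result slot per menu item."""
        job_id = str(uuid.uuid4())
        self.jobs[job_id] = {
            "status": "pending",
            "results": {item: None for item in items},
        }
        return job_id

    def record_result(self, job_id, item, image_url):
        """Called by a background worker each time one image is ready."""
        job = self.jobs[job_id]
        job["results"][item] = image_url
        done = all(url is not None for url in job["results"].values())
        job["status"] = "done" if done else "running"

    def get(self, job_id):
        """Called by the client to poll the latest state."""
        return self.jobs[job_id]
```

The client would poll `get(job_id)` and render whatever images exist so far, so a slow API or a page refresh no longer loses the work. The hard part is everything around it: a real database, a real queue, and the accounts and keys that come with them.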

TLDR. Vibe coding MenuGen was an exhilarating and fun escapade as a local demo, but a bit of a painful slog as a deployed, real app. Building a modern app is a bit like assembling IKEA furniture. There are all these services, docs, API keys, configurations, dev/prod deployments, team and security features, rate limits, pricing tiers... Meanwhile the LLMs have slightly outdated knowledge of everything, they make subtle but critical design mistakes when you watch them closely, and sometimes they hallucinate or gaslight you about solutions. But the most interesting part to me was that I didn't even spend all that much work in the code editor itself. I spent most of it in the browser, moving between tabs and settings and configuring and gluing a monster. All of this work and state is not even accessible or manipulatable by an LLM - how are we supposed to be automating society by 2027 like this?

Going forward. As an exploration of what it's like to vibe code an app today if you have little to no web dev background, I'm left with an equal mix of amazement (it's actually possible and much easier/faster than what was possible before!) and a bit of frustration about what could be. Part of the pain of course is that none of this infrastructure was really designed to be used like this. The intended target audience is teams of professional web developers living in a pre-LLM world. Not vibe coding solo devs prototyping apps. Some thoughts on solutions that could make super simple apps like MenuGen a lot easier to create:

- Some app development platform could come with all the batteries included. Something that looks like the opposite of Vercel Marketplace. Something opinionated, concrete, preconfigured with all the basics that everyone wants: domain, hosting, authentication, payments, database, server functions. If some service made these easy and "just work" out of the box, it could be amazing.

- All of these services could become more LLM friendly. Everything you tell the user will be basically right away copy pasted to an LLM, so you might as well talk directly to the LLM. Your service could have a CLI tool. The backend could be configured with curl commands. The docs could be Markdown. All of these are ergonomically a lot friendlier surfaces and abstractions for an LLM. Don't talk to a developer. Don't ask a developer to visit, look, or click. Instruct and empower their LLM.

- For my next app I'm considering rolling with basic HTML/CSS/JS + Python backend (FastAPI + Fly.io style or so?), something a lot simpler than the serverless multiverse of "modern web development". It's possible that a simple app like MenuGen (or apps like it) could have been significantly easier in that paradigm.

- Finally, it's quite likely that MenuGen shouldn't be a full-featured app at all. The "app" is simply one call to GPT to OCR a menu, and then a for loop over results to generate the images for each item and present them nicely to the user. This almost sounds like a simple custom GPT (in the terminology of the original GPT "app store" that OpenAI released earlier). Could MenuGen be just a prompt? Could the LLM respond not with text but with a simple webpage to present the results, along the lines of Artifacts? Could many other apps look like this too? Could I publish it as an app on a store and earn markup in the same way?
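
To show how little logic that last point describes, here is the whole "app" as a Python sketch. The two callables are placeholders you would have to supply (one wrapping a vision model call, one wrapping an image generation call); nothing here is a real API:

```python
def visualize_menu(menu_image, extract_items, generate_image):
    """Return (item, image) pairs for every dish found on the menu.

    extract_items:  hypothetical callable, menu image -> list of dish names
    generate_image: hypothetical callable, dish name -> image (URL or bytes)
    """
    items = extract_items(menu_image)  # one OCR-style call for the whole menu
    return [(item, generate_image(item)) for item in items]  # one call per dish
```

Everything else in MenuGen (auth, payments, deployment) is scaffolding around these few lines.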

For now, I'm pretty happy to have vibe coded my first super custom app through the finish line of something that is real, solves a need I've had for a long time, and is shareable with friends. Thank you to all the services above that I've used to build it. In principle, it could earn some $ if others like it too, in a completely passive way - the @levelsio dream. Ultimately, vibe coding full web apps today is kind of messy and not a good idea for anything of actual importance. But there are clear hints of greatness and I think the industry just needs a bit of time to adapt to the new world of LLMs. I'm personally quite excited to see the barrier to building an app drop to ~zero, where anyone could build and publish an app just as easily as they can make a TikTok. These kinds of hyper-custom automations could become a beautiful new canvas for human creativity.

The companion tweet (and the "comments section") is on my X @karpathy.